TechGDPR’s review of international data-related stories from press and analytical reports.

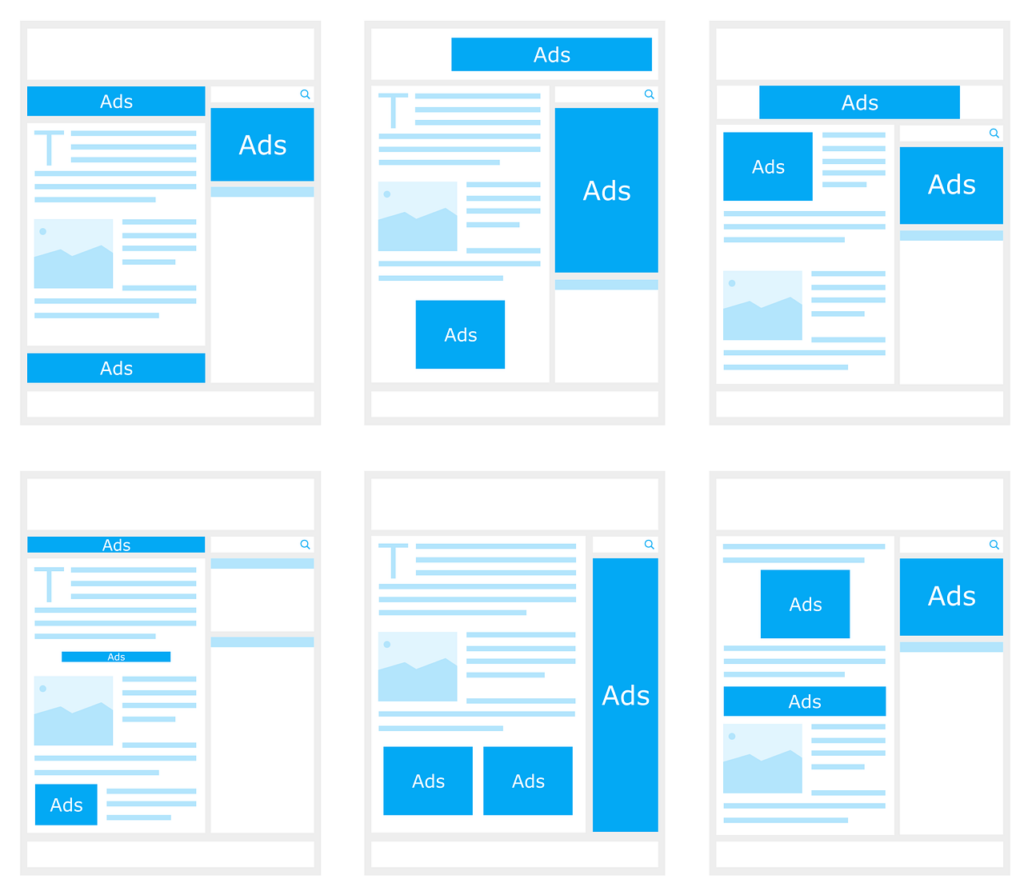

Ad Tech: Meta personalised ads, technical identifier system in App Store, IAB Europe’s consent mechanism

Meta has a few months to reassess the legal basis on which Facebook and Instagram use personal data to target advertising in the EU, after the media giant was fined a total of 390 million euros. The fines relate to a 2018 change in the terms of service of Facebook and Instagram, introduced when the GDPR became applicable, under which Meta sought to rely on the so-called “contract” legal basis for most of its data processing operations. Users who declined to press the “I agree” button could not access the services. The final decision states that Meta cannot rely on contract as a legal basis for this processing, because delivering personalised ads is not necessary to fulfil Facebook’s contract with its users.

The final decision was reached under pressure from many privacy regulators in the EU/EEA (under the one-stop-shop mechanism). In particular, the lead authority, Ireland’s DPC, disagreed with a number of its counterparts and sided with Meta, taking the view that Facebook and Instagram services include, and indeed appear to be premised on, the provision of a personalised service that includes personalised or behavioural advertising. In the DPC’s view, this reality is central to the bargain struck between users and their chosen service provider, and forms part of the contract concluded when users accept the terms of service. When it became clear that no consensus could be reached, the regulators referred the dispute to the EDPB, which then issued a binding decision.

Finally, the DPC was criticised for not having investigated all of Facebook’s and Instagram’s data processing operations, and the EDPB’s binding decision directed it to carry out a fresh investigation. The DPC argues that the EDPB does not have a general supervisory role over independent national authorities akin to that of national courts, and that it is not for the EDPB to instruct and direct an authority to engage in an open-ended investigation. The DPC is now considering bringing an action for annulment before the CJEU to have the EDPB’s directions set aside.

The French privacy regulator CNIL fined Voodoo, a smartphone game publisher, 3 million euros for using a technical identifier for advertising without the user’s consent. The investigation showed the following:

- When Voodoo offers an application on the App Store, Apple provides a technical identifier, the Identifier for Vendor (IDFV), which allows the publisher to track how users use its applications.

- An IDFV is assigned to each user and is the same for all applications distributed by the same publisher.

- Combined with other information from the smartphone, the IDFV is used to track people’s browsing habits, including their preferred game categories, in order to personalise the ads each of them sees.

- When a game application is opened, the user is first shown an Apple-designed prompt (App Tracking Transparency, or ATT) asking for their consent to the tracking of their activity across the applications downloaded on their phone.

- If the user refuses the “ATT solicitation”, Voodoo presents a second window stating that advertising tracking has been disabled and that non-personalised ads will still be shown.

However, the CNIL’s checks showed that even when a user refused advertising tracking, Voodoo still read the technical identifier associated with that user and continued to process information about their browsing habits for advertising purposes, that is, without their consent.
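To make the CNIL’s point concrete, here is a minimal sketch of consent-gated identifier access: the identifier is read only when the user has granted tracking consent, and a refusal produces a non-personalised ad request without touching the identifier at all. This is an illustration under stated assumptions, not Voodoo’s code or any Apple API. `TrackingConsent`, `AdRequest`, `build_ad_request` and the `read_vendor_id` callback are hypothetical names; a real iOS app would obtain the consent status from the platform’s ATT framework.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class TrackingConsent(Enum):
    """Hypothetical consent states, loosely modelled on an ATT-style prompt."""
    GRANTED = "granted"
    DENIED = "denied"
    NOT_DETERMINED = "not_determined"


@dataclass(frozen=True)
class AdRequest:
    """Hypothetical ad request sent to an ad server."""
    personalised: bool
    vendor_id: Optional[str]


def build_ad_request(
    consent: TrackingConsent, read_vendor_id: Callable[[], str]
) -> AdRequest:
    """Read the vendor identifier only if the user has granted tracking consent."""
    if consent is TrackingConsent.GRANTED:
        # Consent given: the identifier may be read and used for personalisation.
        return AdRequest(personalised=True, vendor_id=read_vendor_id())
    # Refused or not yet asked: read_vendor_id() is never called, so the
    # identifier is not read from the device for advertising purposes.
    return AdRequest(personalised=False, vendor_id=None)
```

The design choice that matters is that the refusal branch never calls `read_vendor_id`; the practice the CNIL sanctioned corresponds to reading the identifier in that branch anyway.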

Similarly, the CNIL fined Apple Distribution International 8 million euros for failing to obtain the consent of French iPhone users of the App Store before placing identifiers used for advertising purposes on their devices. Identifiers serving several purposes, including the display of advertising, were read automatically by default on the user’s device without consent.

Meanwhile, the Belgian data protection authority approved IAB Europe’s action plan for its Transparency and Consent Framework (TCF), a widely used approach to collecting and managing consent for targeted advertising cookies in the EU. A year ago, the same authority fined IAB Europe 250,000 euros for multiple GDPR violations, including the absence of a legal basis for processing. The measures proposed in the action plan stem directly from the assumptions that:

- The TC String (a digital marker containing user preferences) should be considered personal data, and

- IAB Europe acts as a (joint) controller for the dissemination of TC Strings and other data processing done by TCF participants.

The Belgian Market Court has referred both of these questions to the CJEU for a preliminary ruling, a referral the Belgian authority itself explicitly requested in the course of the proceedings.

Legal processes and redress: administrative and civil remedies, data subject access rights

The CJEU has ruled that the administrative and civil remedies provided for by the GDPR may be exercised concurrently with, and independently of, each other. Because exercising these remedies in parallel could lead to contradictory decisions (eg, where the supervisory authority rejects an individual’s request and the individual then takes the matter to court), a Hungarian court had asked the CJEU whether one remedy should take priority over the other. The EU’s top court held that it is for each Member State, through its procedural rules, to ensure that the concurrent and independent remedies provided for by the GDPR do not call into question the right to an effective remedy before a court or tribunal.

The CJEU has also confirmed a broad interpretation of the right of access exercised through data subject access requests (DSARs): data controllers must disclose the specific recipients of any data they have shared, unless it is impossible or excessive to do so. The court emphasised that the right of access is necessary to exercise other rights under the GDPR, such as the rights to rectification, erasure, and restriction of processing. The case concerned an individual’s request that a postal and logistics services company disclose the identity of the recipients to whom it had disclosed (sold) the individual’s personal data. At the same time, the right of access should not adversely affect the rights or freedoms of others, including trade secrets or intellectual property, and in particular the copyright protecting software. However, those considerations should not result in a refusal to provide any information at all to the data subject.

Investigations and enforcement actions: failed data access requests and health-related data consent

The Italian privacy regulator fined I-Model (a promoter and web agency specialising in the selection and management of personnel for events and communication) 10,000 euros for failing to respond adequately to access requests and for unlawful processing, Data Guidance reports. Even after I-Model confirmed that the personal data in its files had been deleted, the complainant continued to receive job offers from the company. On two occasions, I-Model responded to the complainant’s deletion requests only formally, stating that it had removed the data from its mailing list while in fact continuing to store and process the data without a legal basis.

The Finnish data protection authority fined an unnamed company 122,000 euros for processing body mass index and maximum oxygen uptake data without consent that met the requirements of the GDPR. The company had asked for consent to process health-related data in general, but had not specified which data it collected and processed, or for what purposes. The sanctions board paid particular attention to the fact that large-scale processing of health data is a key part of the company’s core business. Because the company’s service is also available in other EU and EEA countries, the case was handled in cooperation with other supervisory authorities; one of the complaints had been lodged in another Member State.

The Finnish regulator also imposed a penalty of 750,000 euros on the debt collection company Alektum for failing to respond to data subjects’ requests to exercise their rights. The company also complicated and slowed down the investigation by avoiding contact with the supervisory authority. As a result, several complainants could not access their own data and had no opportunity, for example, to correct it or check that it was being processed lawfully. Every organisation must respond to data subject requests within one month. Where requests are numerous or complex, the controller may extend this period by up to two further months, provided it informs the data subject of the extension within the first month. In the case of one complainant, Alektum justified its failure to respond by saying that it no longer processed the data subject’s personal data. Even so, the company should have responded to the request.
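The one-month rule and its extension lend themselves to a small worked example. The sketch below computes both deadlines from the date a request is received. It is a simplification under stated assumptions: a “month” runs to the same calendar day of the following month, clamped to the last day of a shorter month; the extended deadline is counted as three months from receipt (one month plus two further months); and weekends and public holidays, which may affect the deadline in practice, are ignored. `add_months` and `dsar_deadlines` are hypothetical helper names.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of the target month."""
    index = d.month - 1 + months
    year = d.year + index // 12
    month = index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def dsar_deadlines(received: date) -> tuple[date, date]:
    """Return (standard, extended) response deadlines for a data subject request.

    Assumptions: one month from receipt, extendable by two further months
    (three months from receipt in total); weekends and holidays are ignored.
    """
    return add_months(received, 1), add_months(received, 3)
```

For a request received on 31 January 2023, `dsar_deadlines(date(2023, 1, 31))` returns `(date(2023, 2, 28), date(2023, 4, 30))`: February has no 31st, so the standard deadline is clamped to the end of that month.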

Official guidance: AI supervision and transparency requirements, Privacy by Design as an international standard, EU whistleblowing scheme report

The Norwegian data protection authority has published an experience report on how to inform people about the use of artificial intelligence. Transparency requirements related to the development and use of AI are typically divided into three main phases:

- development of the algorithm,

- application of the algorithm,

- post-learning and improvement of the algorithm.

The GDPR’s information requirements are general and largely the same for all phases, but some requirements only become relevant in certain phases. For example, the requirement to provide information about the underlying logic of the AI will usually only be relevant in the application phase. The full guidance is available in Norwegian.

In parallel, the Dutch data protection authority is setting up a new unit to strengthen the supervision of algorithms. During 2023 it will identify the risks and effects of algorithm use across sectors and domains. Where necessary, it will deepen its collaboration with other supervisors (eg, on transparency obligations in the various laws, regulations, standards, and frameworks), with the aim of preventing discrimination and promoting transparency in algorithms that process personal data.

Denmark’s data protection authority has reviewed the first year of the newly introduced EU whistleblowing scheme. Two out of three reports received concerned data protection (eg, insufficient security of processing and monitoring of employees). This is partly because the scheme is run by the national data protection authority: although it is mandated to receive and process reports of breaches of EU law in a number of areas, including public procurement, product safety, environmental protection, and food safety, as well as reports of serious offences or other serious matters such as harassment, many people associate the scheme with data protection only. All cases concluded in 2022 were completed within the deadlines, taking 27 days on average.

Finally, the International Organization for Standardization is about to adopt ISO 31700 on Privacy by Design for consumer products and services. ISO 31700 is intended for organisations of all sizes, from startups to multinational enterprises. It sets out 30 requirements, together with guidance on:

- designing capabilities to enable consumers to enforce their privacy rights,

- assigning relevant roles and authorities,

- providing privacy information to consumers,

- conducting privacy risk assessments,

- designing, establishing, and documenting requirements for privacy controls,

- lifecycle data management, and

- preparing for and managing a data breach.

However, the standard will not initially be mandatory.