The GDPR's entry into force coincides with the wider adoption of artificial intelligence, as the technology becomes embedded in more and more enterprise applications. There is palpable excitement around AI and its potential to revolutionize seemingly every facet of every industry. Studies suggest that 80% of executives believe AI boosts productivity. In the immediate future, executives are looking to AI to take over repetitive, menial tasks such as paperwork (82%), scheduling (79%) and timesheets (78%). By 2025, the artificial intelligence market is projected to surpass $100 billion.

Alongside the excitement, there are concerns. Chief among them is data privacy, and the tension between data privacy and artificial intelligence is most pronounced under the General Data Protection Regulation (GDPR).

The GDPR is designed to protect the privacy of individuals in the EU and give them more control over their personal data. It aims to establish a new relationship between user and system, one where transparency and a standard of privacy are non-negotiable. Artificial Intelligence (AI) is a set of technologies or systems that allow computers to perform tasks that simulate human intelligence, including decision making and learning. To do so, such systems typically collect large volumes of data (so-called Big Data), much of it personal data. AI (especially Machine Learning [ML] algorithms) and Big Data go hand in hand, which has led many to question whether it is possible to use AI while still protecting the fundamental data protection rights set out in the GDPR.

Applying the GDPR to machine learning and artificial intelligence

The GDPR, a sprawling piece of legislation, applies to artificial intelligence both when a system is developed using personal data and when it is used to analyze or reach decisions about individuals. The provision aimed most squarely at machine learning is Article 22 (see also Recital 71), which states that “the data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her.” Also noteworthy are Articles 13 and 15, which give data subjects a right to “meaningful information about the logic involved” and to information about “the significance and the envisaged consequences” of automated decision-making.

It is clear that the regulation expects technologies like AI to be developed with the following principles in mind:

- fairness,

- purpose limitation,

- data minimisation,

- transparency, and

- the right to information.

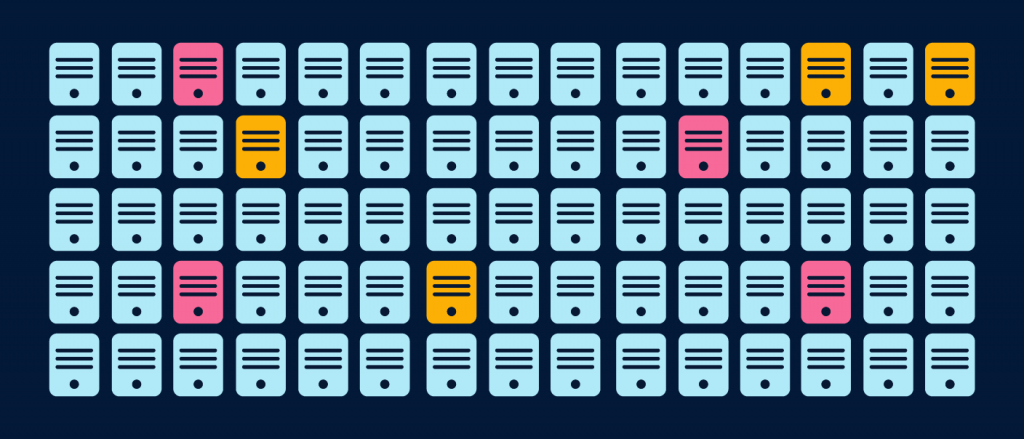

Meeting these principles is among the major challenges AI faces in adapting to the new world of the GDPR. The difficulty is that most machine learning decision-making systems are “black boxes” rather than old-style rule-based expert systems, and therefore struggle to satisfy the GDPR requirements of transparency, accountability, and putting the data subject in control.

Solutions and Recommendations to make Artificial Intelligence GDPR-friendly

Some data sets used to train AI systems have been found to contain inherent biases, which can result in decisions that unfairly discriminate against certain individuals or groups. To be GDPR compliant, the design, development and use of AI should ensure that there are no unlawful biases or discrimination. Companies should invest in technical research to identify, address and mitigate biases.

One way to address bias in trained machine learning models is to build transparent models. Organizations should improve the transparency of AI systems by investing in scientific research on explainable artificial intelligence. They should also make their own practices more transparent, ensuring that individuals are informed appropriately when they are interacting with AI and are given adequate information on the purpose and effects of AI systems.

With respect to data minimisation, developers should start by researching solutions that use less training data, anonymisation techniques, and solutions that can explain how systems process data and how they reach their conclusions.

There is a need for privacy-friendly development and use of AI. AI should be designed and developed responsibly by applying the principles of privacy by design and privacy by default.

Organizations should conduct a data protection impact assessment at the beginning of an AI project and document the process. A report by the Norwegian Data Protection Authority, “Artificial intelligence and privacy”, suggests that the impact assessment should include the following as a minimum:

- a systematic description of the process, its purpose, and which justified interest it protects;

- an assessment of whether the process is necessary and proportional, given its purpose;

- an assessment of the risk that processing involves for people’s rights, including the right to privacy; and

- the identification of the measures selected for managing risk.

Tools and methods for good data protection in Artificial Intelligence

In addition to impact assessment and documentation of the process to meet the requirements of transparency and accountability, the Norwegian Data Protection Authority report mentioned above describes tools and methods for good data protection in AI. According to the report, these methods have not been evaluated in practice but were assessed according to their potential. The methods are divided into three categories:

- Methods for reducing the need for training data.

- Methods that uphold data protection without reducing the basic dataset.

- Methods designed to avoid the black box issue.

1. Methods that can help to reduce the need for training data include:

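- Generative Adversarial Networks (GANs) train two neural networks against each other: a generator that produces synthetic data and a discriminator that tries to tell the synthetic data apart from real data. Synthetic data produced this way could reduce the amount of real personal data needed for training. More speculatively, GANs might be an important step towards a form of artificial intelligence that can mimic human behavior, make decisions and perform functions without having a lot of data.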

- Federated Learning is a privacy-friendly and flexible approach to machine learning in which raw data are not collected centrally. In a nutshell, the parts of the algorithm that touch the data are moved to the users’ devices. Users collaboratively help to train a model by using their locally available data to compute model improvements. Instead of sharing their data, users then send only these abstract improvements back to the server.

- Matrix Capsules are a new variant of neural networks, and require less data for learning than what is currently the norm for deep learning.
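
The federated learning loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production protocol: the model is a hypothetical one-feature linear regressor, the client data are synthetic, and the function names (`local_update`, `federated_round`, `train`) are invented for this example. The point it tries to show is that only weight deltas leave each client; the raw `(x, y)` pairs never reach the server.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient steps on one client's private data.

    Returns only the change in weights, never the data itself.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w - weights[0], b - weights[1])


def federated_round(weights, clients):
    """Server step: average the clients' deltas, weighted by local dataset size."""
    deltas = [local_update(weights, data) for data in clients]
    total = sum(len(data) for data in clients)
    dw = sum(d[0] * len(data) for d, data in zip(deltas, clients)) / total
    db = sum(d[1] * len(data) for d, data in zip(deltas, clients)) / total
    return (weights[0] + dw, weights[1] + db)


def make_client(rng, n):
    """Synthetic private data for one client (assumption: y = 3x + 1 plus noise)."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1, 1)
        data.append((x, 3 * x + 1 + rng.gauss(0, 0.05)))
    return data


def train(rounds=100, n_clients=5, seed=0):
    rng = random.Random(seed)
    clients = [make_client(rng, 20) for _ in range(n_clients)]
    weights = (0.0, 0.0)  # global model held by the server
    for _ in range(rounds):
        weights = federated_round(weights, clients)
    return weights


if __name__ == "__main__":
    w, b = train()
    print(f"learned w={w:.3f}, b={b:.3f}")  # should land near the true 3 and 1
```

Note that sharing updates instead of data reduces exposure but does not by itself guarantee privacy, since updates can still leak information about the underlying data; in practice this approach is often combined with techniques such as secure aggregation or added noise.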

2. The field of cryptology offers some promising possibilities in the area of protecting privacy without reducing the data basis, including the following methods:

- Differential privacy is a leading technique in computer science for allowing accurate data analysis with formal privacy guarantees. It protects privacy by purposefully adding noise (i.e. deliberate errors) to the data, so that even if it were possible to recover data about an individual, there would be no way to know whether that information was meaningful or nonsensical. One useful feature of this approach is that even though errors are deliberately introduced into the data, they roughly cancel each other out when the data is aggregated.

- Homomorphic encryption can help to enforce GDPR compliance in AI solutions without necessarily constraining progress. It is a form of encryption that allows computations to be performed on data while it is still encrypted, which means confidentiality can be maintained without limiting the usage possibilities of the dataset.

- Transfer Learning makes it possible to train Deep Neural Networks with comparatively little data. It is the reuse of a pre-trained model on a new problem. In other words, transfer learning attempts to carry over as much knowledge as possible from the task the model was originally trained on to the new task at hand.
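
To make the differential privacy idea above concrete, here is a minimal sketch using randomized response, a classic local differential privacy mechanism (one of several ways to add noise; the Laplace mechanism is another common choice). Everything here is illustrative: the function names, the 30% "true rate" and the survey framing are assumptions made for this example. Each individual's report is noisy and therefore deniable, yet the aggregate can still be estimated because the noise has a known distribution.

```python
import math
import random


def randomized_response(truth, rng, p_truth=0.5):
    """Report the true yes/no answer with probability p_truth, else a fair coin flip."""
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def epsilon(p_truth=0.5):
    """Privacy parameter of the mechanism: log of the worst-case likelihood ratio."""
    p_yes_given_yes = p_truth + (1 - p_truth) / 2
    p_yes_given_no = (1 - p_truth) / 2
    return math.log(p_yes_given_yes / p_yes_given_no)


def estimate_true_rate(reports, p_truth=0.5):
    """Invert the known noise: observed = (1 - p_truth) / 2 + p_truth * true_rate."""
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) / 2) / p_truth


def simulate(n=50000, true_rate=0.3, seed=42):
    """Hypothetical survey: returns (actual rate in the sample, rate estimated from noisy reports)."""
    rng = random.Random(seed)
    truths = [rng.random() < true_rate for _ in range(n)]
    reports = [randomized_response(t, rng) for t in truths]
    return sum(truths) / n, estimate_true_rate(reports)


if __name__ == "__main__":
    actual, estimated = simulate()
    print(f"actual rate {actual:.3f}, estimate from noisy reports {estimated:.3f}")
    print(f"epsilon = {epsilon():.3f}")
```

A lower `p_truth` gives stronger deniability for each person (a smaller epsilon) at the cost of a noisier aggregate estimate, which is the basic privacy and accuracy trade-off in this family of methods.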

3. Methods for avoiding the black box issue include:

- Explainable AI (XAI) plays an important role in achieving fairness, accountability and transparency in machine learning. It is based on the idea that all the automated decisions made should be explicable. In XAI, the artificial intelligence is programmed to describe its purpose, rationale and decision-making process in a way that can be understood by the average person.

- Local Interpretable Model-Agnostic Explanations (LIME) provides an explanation of a decision after it has been made, which means it does not make the model itself transparent from start to finish. Its strength lies in the fact that it is model-agnostic, which means it can be applied to any model in order to produce explanations for its predictions.
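
The post-hoc, model-agnostic idea behind LIME can be illustrated with a small sketch. This is a simplified illustration in the spirit of LIME, not the `lime` library or its exact algorithm: the `black_box` scoring function, its two features (income and debt), the Gaussian perturbations and the kernel width are all assumptions made for this example. The sketch perturbs one input, queries the opaque model, weights each perturbed sample by its proximity to the original, and fits a weighted linear model whose coefficients serve as the local explanation.

```python
import math
import random


def black_box(x):
    """Stand-in opaque model (hypothetical): a score in (0, 1) from (income, debt)."""
    income, debt = x
    return 1.0 / (1.0 + math.exp(-(2.0 * income - 3.0 * debt + 0.5 * income * debt)))


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def explain_locally(model, instance, n_samples=2000, scale=0.3, width=0.5, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns (intercept, coefficients); each coefficient approximates how the
    model's output changes per unit change of that feature near the instance.
    """
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0, scale) for _ in range(d)]
        perturbed = [v + o for v, o in zip(instance, offsets)]
        dist2 = sum(o * o for o in offsets)
        weight = math.exp(-dist2 / width ** 2)  # closer samples count more
        row = [1.0] + offsets  # intercept term plus features centred on the instance
        y = model(perturbed)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]


if __name__ == "__main__":
    intercept, coefs = explain_locally(black_box, [0.5, 0.2])
    print(f"local effect of income: {coefs[0]:+.3f}, of debt: {coefs[1]:+.3f}")
```

Because the surrogate only calls `model` as a function, the same procedure works for any predictor, which is what model-agnostic means here. The explanation is valid only near the chosen instance and says nothing about the model's global behavior.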

The GDPR requires that technologies like AI and machine learning take privacy concerns into consideration as they are developed. With the GDPR, the road ahead will be bumpy for machine learning, but not impassable. Adopting the measures and methods discussed above can help to ensure that AI processes are in line with the regulation. They could also go a long way toward achieving accountable AI programs that can explain their actions and reassure users that AI is worthy of their trust.