TechGDPR’s review of international data-related stories from press and analytical reports.

Legal processes and redress

The EU Whistleblowing Directive is due to be implemented into national law by 17 December. It requires all EU Member States to pass legislation obliging companies with 50 or more workers to put in place appropriate reporting channels, so that those workers can report breaches of EU law, and to ensure that whistleblowers are legally protected against retaliation for making a report. Businesses with operations across the EU also need to monitor implementation and understand the local requirements set by data protection authorities, as there will be variations between jurisdictions (see the country-by-country implementation tracker from Bird & Bird LLP). Key areas to address will be ensuring that:

- reports are handled by the correct people, in accordance with prescribed timescales and with appropriate security and confidentiality;

- required information is given to the whistleblower and to the person investigated;

- there is guidance and training in place to ensure non-retaliation; and

- there are appropriate retention periods for reports and investigation data.

An article by Ius Laboris lawyers explains how this could be implemented in practice, using Germany as an example and covering works councils, internal codes of conduct, reporting options and controls.

Uber, Deliveroo and a dozen other two-sided online platforms could be hit by draft EU rules for gig workers. They may have to reclassify some of their workers as employees under a new proposal from the EU Commission meant to boost their social rights. The rules apply to ride-hailing apps, food delivery apps and similar platforms, and require companies to inform employees how their algorithms are used to monitor and evaluate them, allocate tasks and set fees. Employees can also demand compensation for breaches, Reuters reports. The rules place the burden on online platforms to provide evidence that these regulations do not apply to them. Workers can also challenge their reclassification either via an administrative process or in a court. The draft rules will need to be thrashed out with EU member states and EU lawmakers before they can be adopted, with the Commission estimating a 2025 time frame.

In Germany, the administrative court of Wiesbaden issued a preliminary decision prohibiting RheinMain University from using Cybot A/S’s consent management platform Cookiebot by Usercentrics, DataGuidance reports. In particular, the court found that:

- Cookiebot CMP transfers the complete IP address of the end user to the servers of a cloud company whose headquarters are in the US.

- The end user was identifiable from a combination of a key stored in the user’s browser, which identified the website visitor, and the transferred full IP address.

- This constituted a transfer of personal data to a third country, which the court underlined is prohibited in line with the “Schrems II” CJEU judgment.

Even if the corresponding server is possibly located in the EU, the US group has access to it, so the US Cloud Act, with its broad query options for US authorities, applies. Finally, the university did not ask for users’ consent to the data transfer, users were not informed about the possible risks associated with the transfer resulting from the US Cloud Act, and the data transfer was not necessary for the operation of the university’s website.

Official guidance

In Austria, a newly approved Code of Conduct (available in German only) establishes more legal certainty for insurance brokers and consultants. In particular, the document (approved by the data protection authority in accordance with Art. 40 of the GDPR) finally clarifies the legal status of the insurance broker as the data controller, who acts independently in the interests of the customer and is not subject to any data protection instructions from an insurance company. In addition, there is now clarity about the justification for data processing with regard to “simple” and “special” categories of personal data. An advantage for all those who want to officially adhere to the Code of Conduct is an objective external monitoring body entrusted with checking compliance.

Data breaches, investigations and enforcement actions

The Dutch data protection authority, AP, imposed a fine of 2.75 million euros on the tax authorities. For years the tax administration processed the dual nationality of applicants for childcare allowance in an unlawful, discriminatory and improper manner. The dual nationality of Dutch nationals does not play a role in assessing an application for childcare allowance. Nevertheless, the tax administration kept and used this information. In addition, the tax authorities processed the nationality of applicants as an indicator to combat organised crime, using a system that automatically designated certain applications as high-risk. The data was not necessary for those purposes, and the administration should have deleted it in line with the GDPR data minimisation principle. In 2018 the tax administration stopped using these indicators, and by 2020 the dual nationalities of Dutch people were completely removed from its systems.

The UK Information Commissioner’s Office, the ICO, hit broadband ISP and TV operator Virgin Media with a 50,000 pound fine after it sent nearly half a million direct marketing emails to people who had previously opted out. In August 2020 the regulator received a complaint from one of the operator’s customers about the unsolicited email. The message itself took the form of a price notification and attempted to get the customer to opt back into marketing communications. Just one customer complained to the ICO about receiving the spam, but that was enough to spur the regulator into investigating. Even though 6,500 customers decided to opt back into receiving marketing emails as a result of the mailshot, the ICO said this was not enough to ignore the UK’s Privacy and Electronic Communications Regulations. “The fact that Virgin Media had the potential for financial gain from its breach of the regulation, (by signing up more clients to direct marketing), is an aggravating factor”, the ICO stated.

The Norwegian data protection authority, Datatilsynet, has punished the Norwegian Public Service Pension Fund (SPK) with an infringement fee of 99,000 euros. SPK collected unnecessary income information about approximately 24,000 people. It had obtained income information from the tax administration since 2016, and itself revealed that part of that information should not have been collected, as it was not necessary for the post-settlement of disability benefits. The information was obtained through a predefined data set from the tax authority. Until 2019, SPK did not have routines for reviewing and deleting the surplus information that was collected, violating basic principles for data processing, including those for special categories of personal information.

Artificial Intelligence

More and more companies will become engaged in developing and building AI systems, and in using AI systems that are already deployed. Sooner or later, therefore, potentially all companies will need to deal with the underlying legal issues to ensure accountability for AI systems, says an analysis by Bird & Bird LLP. One of these accountability requirements will often be the need to conduct a Data Protection Impact Assessment. DPIAs for AI systems differ from similar assessments for the development and deployment of common software because of peculiarities inherent in the nature of AI systems and how they work. The main points to consider are:

- Distinguishing between DPIAs for AI system development/enhancement (eg, training the algorithm) and for AI system deployment for productive use (eg, CVs of candidates are rejected based on the historical data fed into an algorithm).

- Taking a precise, technology-neutral approach to capturing the essential characteristics of AI (eg, systems with the goal of resembling intelligent behaviour by using methods of reasoning, learning, perception, prediction, planning or control).

The most important aspects of DPIAs for AI systems development/enhancement should include: controllership, purpose limitation, purpose alteration, necessity, statistical accuracy, data minimization, transparency, individual rights, and data security risk assessment. Data controllers (providers of the AI system or the customers that deployed it) may also voluntarily decide to conduct DPIAs as an appropriate measure to strengthen their accountability and safeguard data subjects’ rights. This may ultimately also help to win customer trust and maintain a competitive edge.

Opinion

The Guardian publishes thoughts by a former co-leader of Google’s Ethical AI team Timnit Gebru:

“When people ask what regulations need to be in place to safeguard us from the unsafe uses of AI we’ve been seeing, I always start with labor protections and antitrust measures. I can tell that some people find that answer disappointing – perhaps because they expect me to mention regulations specific to the technology itself.” In her opinion, the incentive structure must be changed to prioritize citizens’ well-being. To achieve that, “an independent source of government funding to nourish independent AI research institutes is needed, that can be alternatives to the hugely concentrated power of a few large tech companies and the elite universities closely intertwined with them.”

Individual rights

Monitoring of workers’ personal data via entrance control systems is the subject of an article on the Social Europe website. For tracking entrance to and exit from the workplace and ensuring its safety, electronic control systems into which limited and non-sensitive worker data are uploaded are more compliant with legal instruments than biometric systems. Biometric entrance-control systems should therefore be a last resort, limited to exceptional areas which require high security or where highly confidential information is kept. As the article sums up, the EU’s GDPR does not directly regulate the monitoring of workers by electronic and biometric entrance-control systems. Provisions on such monitoring can be found in specific national legislation, but also in the Council of Europe’s Recommendation CM/Rec(2015)5 on the processing of personal data in the context of employment, and in Opinion 2/2017 of the Article 29 Working Party.

Data security

How do SIM swapping attacks work and what can you do to protect yourself? The European Union Agency for Cybersecurity, ENISA, has taken a technical deep dive into the subject. Since 2017 such attacks have usually targeted banking transactions, but not exclusively: attackers also go after the cryptocurrency community, social media and email accounts. In a SIM swapping attack, the attacker uses social engineering to convince the telecom provider to perform the SIM swap, pretending to be the real customer and claiming, for example, that the original SIM card is damaged or lost. Specific circumstances may create opportunities for attackers, such as:

- Weak customer authentication processes;

- Negligence or lack of cyber training or hygiene;

- Lack of risk awareness.

More information for the public is available in the ENISA Leaflet “How to Avoid SIM-Swapping”.

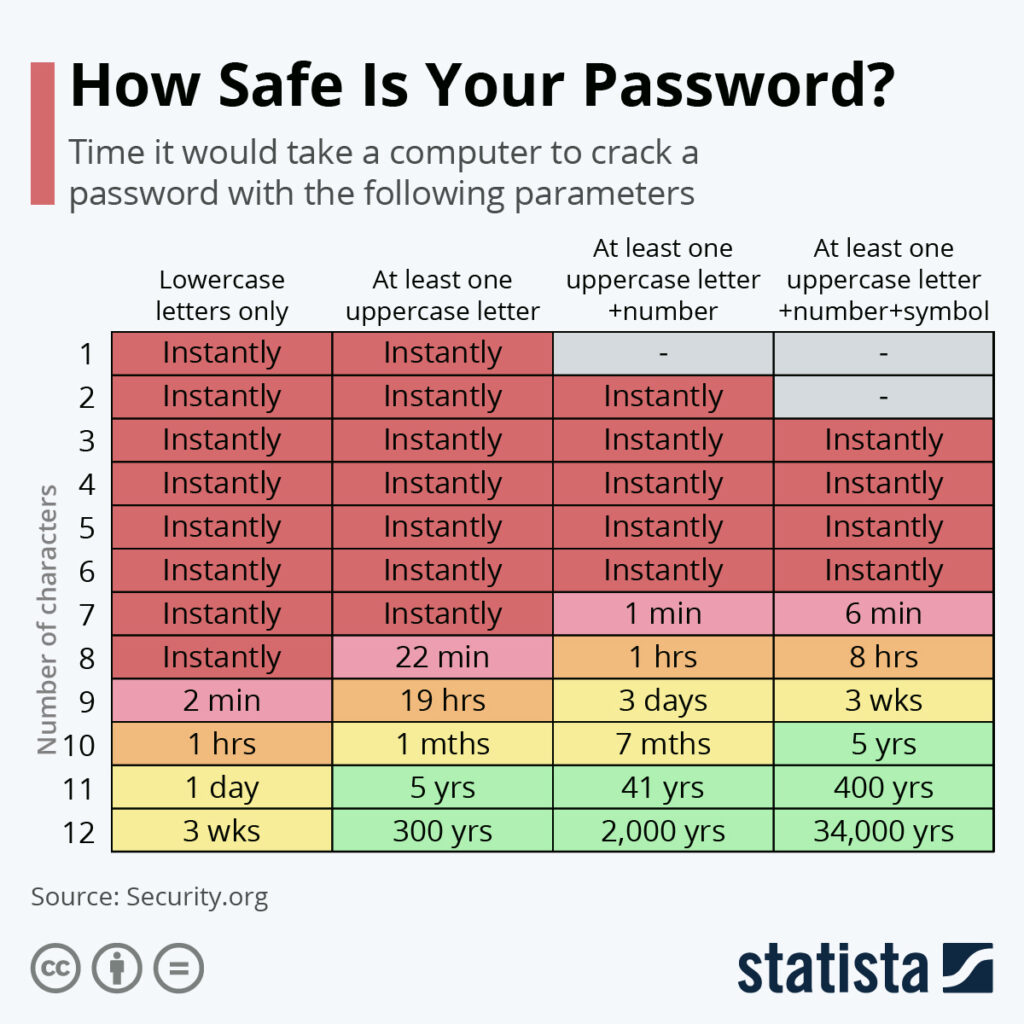

How long would it take a computer to hack your exact password? The latest chart from Statista illustrates that a password of 8 standard letters has 209 billion possible combinations, yet a computer can crack it instantly. Adding one upper case letter to a password dramatically alters a computer’s ability to crack it, extending the time needed to 22 minutes. Having a long mix of upper and lower case letters, symbols and numbers is the best way to make your password more secure. A 12-character password containing at least one upper case letter, one symbol and one number would take 34,000 years for a computer to crack.
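The 209 billion figure follows from simple counting: 8 positions with 26 possible lowercase letters each give 26^8 = 208,827,064,576 combinations. The minimal sketch below reproduces that arithmetic and shows how the search space grows once upper case letters are allowed. The function names and the guess rate are illustrative assumptions, not values taken from the Statista chart, so the printed time will not match the chart's crack-time figures.

```python
def search_space(alphabet_size: int, length: int) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def seconds_to_exhaust(space: int, guesses_per_second: float) -> float:
    """Worst-case time to try every candidate at a fixed guess rate."""
    return space / guesses_per_second


LOWERCASE = 26        # a-z
MIXED_CASE = 52       # a-z plus A-Z
ASSUMED_RATE = 1e11   # hypothetical guesses per second, NOT Statista's figure

lower_8 = search_space(LOWERCASE, 8)
mixed_8 = search_space(MIXED_CASE, 8)

print(f"8 lowercase letters:  {lower_8:,} combinations")
print(f"8 mixed-case letters: {mixed_8:,} combinations")
print(f"Growth from allowing upper case: {mixed_8 // lower_8}x")
print(f"Worst case at assumed rate: {seconds_to_exhaust(mixed_8, ASSUMED_RATE):.0f} s")
```

Because the search space is exponential in the password length, each extra character multiplies the work by the alphabet size, which is why length combined with a larger alphabet has such a strong effect.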

Big Tech

Twitter is reviewing a controversial policy that penalizes users who share images of other users without their consent, The Guardian reports. The company has launched an internal review of the policy after making several errors in its enforcement. The platform now allows users to report other users who tweet “private media that is not available elsewhere online as a tool to harass, intimidate, and reveal the identities of individuals”. If a review concludes the complaint has merit and the image was not used for a journalistic or public interest purpose, the offending accounts are deactivated. Some activists say the broad nature of the new rules makes them ineffective and ripe for abuse against the most vulnerable groups, while some reporters, photographers and journalists are concerned that the rules do not take into account the lack of a reasonable expectation of privacy in public spaces, and would undermine “the ability to report newsworthy events by creating nonexistent privacy rights”.

A Virginia federal court granted Microsoft’s request to seize 42 US-based websites run by a Chinese hacking group, IAPP reports. Microsoft, which has been tracking the hacker group known as Nickel since 2016, is redirecting the websites’ traffic to secure Microsoft servers to “protect existing and future victims.” Microsoft’s Corporate VP of Customer Security and Trust said Nickel targeted organizations in 29 countries, using collected data “for intelligence gathering from government agencies, think tanks, universities and human rights organizations.”

Several Amazon services, including its website, Prime Video and applications that use Amazon Web Services (AWS), went down last week for thousands of users in the US and EU. Amazon’s Ring security cameras, mobile banking app Chime and robot vacuum cleaner maker iRobot also faced difficulties. Amazon said the outage was probably due to problems related to an application programming interface (API), which is a set of protocols for building and integrating application software. The huge trail of damage from a network problem came from a single region, “US-EAST-1”, and underscored how difficult it is for companies to spread their cloud computing around, Reuters reports. With 24% of the overall market, according to research firm IDC, Amazon is the world’s biggest cloud computing firm. Rivals like Microsoft, Alphabet’s Google and Oracle are trying to lure AWS customers to use parts of their clouds, often as a backup.

Russia has blocked the popular privacy service Tor, ratcheting up internet control, Reuters reports. Russia has exerted increasing pressure on foreign tech companies this year over content shared on their platforms and has also targeted virtual private networks (VPNs) and other online tools. The Tor anonymity network is used to hide computer IP addresses to conceal the identity of an internet user. Tor also allows users to access the so-called “dark web”. Tor, which says its mission is to advance human rights and freedoms, has more than 300,000 users in Russia, or 14% of all daily users, second only to the US.

A recently uncovered software flaw could be the “most critical vulnerability of the last decade”, the Guardian reports. The flaw, dubbed “Log4Shell”, was uncovered in Log4j, an open-source logging tool from Apache that is ubiquitous in websites and web services. It was reported to Apache by Alibaba on November 24th, and disclosed by Apache on December 9th. Reportedly it allows hackers password-free access to internal systems and databases. The open-source logging tool is standard kit for cloud servers and enterprise software, and is used across business and government. Few computer skills are needed to steal or obliterate data, or to install malware, by exploiting the bug. It will be days before the full extent of the damage is known.