TechGDPR’s review of international data-related stories from press and analytical reports.

Legal processes: US hosting provider in the EU, Google Fonts, IAB Europe, California Age-Appropriate Design Code, new Swiss privacy law

In Germany, a higher regional court overturned a public procurement chamber’s decision that had excluded a bid for hospitals’ digital discharge management because the data would be stored in Luxembourg. Two public hospitals in Baden-Württemberg had agreed to send data to the Luxembourg branch of a US hosting provider. The procurement chamber had found that using the services of the Luxembourg subsidiary of a US company would entail an inadmissible data transfer to a third country, reasoning that “the latent risk of access by government and private bodies outside the European Union (here the US) is sufficient for this assumption.” The Karlsruhe court disagreed: in this case, specific guarantees offered by one of the bidders to store and process data only in Germany convinced the court that sufficient safeguards would apply. This decision is final.

According to TechnikNews, Google Fonts are currently the subject of a veritable tsunami of GDPR claims in Austria and Germany. Many website owners have received letters and emails from data protection lawyers alleging data protection violations and requesting a “settlement.” The letters claim that, without the visitor’s permission, the website operator “forwarded the client to a business of the US Alphabet Inc.” (Google’s parent company), because the embedded fonts are loaded from Google’s servers. The claims appear to build on a Munich Regional Court decision that ruled in favour of a claimant in a “Google Fonts” case. Google sees it differently, according to its own privacy policy: although the user’s browser does send a request to Google, IP addresses are not logged. Furthermore, “the use of the Google Fonts API is not authenticated and the Google Fonts API does not set any cookies or log them.” See the original publication for more technical analysis.
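The claims turn on the fact that a remotely embedded font makes the visitor’s browser request files from Google’s servers, which discloses the visitor’s IP address. A minimal sketch of how a site owner might audit a page for such references, assuming only static HTML is checked (fonts injected by JavaScript or third-party widgets are not caught); the function name is hypothetical.

```python
import re

# Matches absolute (https://) and protocol-relative (//) references to the
# Google Fonts CSS host and the font-file host.
GOOGLE_FONTS_URL = re.compile(
    r"(?:https?:)?//fonts\.(?:googleapis|gstatic)\.com[^\s\"'()<>]*"
)


def find_remote_google_fonts(html: str) -> list:
    """Return Google Fonts URLs referenced in the HTML, in order of first appearance."""
    matches = (m.group(0) for m in GOOGLE_FONTS_URL.finditer(html))
    # dict.fromkeys drops duplicates while preserving order.
    return list(dict.fromkeys(matches))
```

Any URL this returns points to a request the visitor’s browser makes directly to Google; serving the font files from the site’s own server avoids that request.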

The Belgian Market Court has referred questions arising from the appeal against the ruling on IAB Europe’s Transparency & Consent Framework (TCF) to the CJEU. In an interim ruling, the court decided to ask the CJEU for a preliminary ruling on how the GDPR concept of data controllership should be interpreted as it pertains to this case, and on whether a TC String (a digital signal containing user preferences) can be considered “personal data” under the GDPR. The referral to the EU’s top court means a final judgment is unlikely before 2023. IAB Europe disputes the finding of the Belgian supervisory authority APD that it acts as a controller for the recording of TC Strings and as a joint controller for the dissemination of TC Strings and other data processing carried out by TCF vendors under the OpenRTB protocol. It also challenges the APD’s assessment of the validity of the legal bases established by the TCF, which was made in the abstract, without reference to the particular circumstances of the data processing.
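To make the “digital signal” more concrete: as we understand the IAB Europe TCF v2 specification, a TC String is a set of dot-separated, unpadded base64url segments, each a packed bit field. The sketch below decodes only the leading header fields of the core segment; the field widths are our reading of the spec and should be checked against the official document, and purpose and vendor consents are not decoded. Function and constant names are hypothetical.

```python
import base64
from datetime import datetime, timezone

# Leading fields of the TCF v2 core segment and their widths in bits, as we
# read the IAB Europe TC String specification (verify against the official spec).
CORE_HEADER = [
    ("version", 6),
    ("created", 36),            # deciseconds since the Unix epoch
    ("last_updated", 36),       # deciseconds since the Unix epoch
    ("cmp_id", 12),
    ("cmp_version", 12),
    ("consent_screen", 6),
    ("consent_language", 12),   # two letters, 6 bits each (A=0 ... Z=25)
    ("vendor_list_version", 12),
]


def _segment_bits(segment: str) -> str:
    # Segments are unpadded base64url; restore the padding before decoding.
    raw = base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))
    return "".join(f"{byte:08b}" for byte in raw)


def decode_core_header(tc_string: str) -> dict:
    """Decode the leading header fields of a TC String's core segment."""
    bits = _segment_bits(tc_string.split(".")[0])  # the core segment comes first
    fields, pos = {}, 0
    for name, width in CORE_HEADER:
        fields[name] = int(bits[pos:pos + width], 2)
        pos += width
    lang = fields["consent_language"]
    fields["consent_language"] = chr(ord("A") + (lang >> 6)) + chr(ord("A") + (lang & 0x3F))
    for key in ("created", "last_updated"):
        fields[key] = datetime.fromtimestamp(fields[key] / 10, tz=timezone.utc)
    return fields
```

Even this header carries timestamps and identifiers of the consent management platform alongside the user’s choices, which is part of why the personal-data question matters.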

California lawmakers approved the state’s own version of the Age-Appropriate Design Code. If the governor signs the bipartisan bill (AB-2273) into law, online services that violate its provisions could face fines as high as 7,500 dollars per affected child. The bill covers social media, gaming and other online services likely to be accessed by children under 18. Among other things, covered businesses would have to:

- complete and implement a DPIA,

- estimate the age of child users with a reasonable level of certainty, or

- apply the privacy and data protections afforded to children to all consumers,

- configure all default privacy settings provided to children to settings that offer a high level of privacy,

- provide privacy information in clear language suited to the age of the children likely to use the service,

- do not use the child’s data in a way that the business knows, or has reason to know, is materially detrimental to their physical or mental health, or well-being,

- do not profile a child by default (unless sufficient safeguards are in place, or it is necessary for the performance of the contract and is in the best interest of the child),

- do not collect, sell, share, or retain personal information that is not necessary to provide an online service, product, or feature with which a child is actively and knowingly engaged, etc.

In Switzerland, the new data protection law will enter into force on 1 September 2023, according to a recent decision of the Federal Council. The one-year lead time should leave businesses sufficient time to implement the new law. The revised legislation takes account of technological advances and strengthens the rights of individuals over their data, as well as transparency on how it is collected. Some private data controllers are relieved of certain obligations relating to the duty to inform when personal data is disclosed. The right of access is simplified by removing the obligation to document the reasons for refusing, restricting or deferring disclosure. The data security requirements were reinforced (eg, a one-year retention period for logs of data processing) in response to critical feedback during the consultation period. Swiss legislators say the modernised law guarantees an adequate level of privacy and safe cross-border transfers. The EU has recognised Switzerland’s level of data protection as adequate since 2000; this recognition is currently under review.

Official guidance: PETs, GDPR implementation, secondary use of health data, decentralised AI, employees’ digital activities, token access, privacy notice

The UK ICO issued draft guidance on privacy-enhancing technologies (PETs). These can be software and hardware solutions, methods or knowledge that achieve specific privacy or data protection functionalities or protect against risks to the privacy of an individual or a group of natural persons. The guidance answers questions on:

- How can PETs help with data protection compliance? (data protection by design and by default, data minimisation, robust anonymisation or pseudonymisation solutions)

- What are the different types of PETs? (derive or generate data that reduces or removes the identifiability of individuals, hide or shield data, split datasets or control access to certain parts of the data, etc)

- A detailed description of some PETs, their residual risks, and implementation considerations with practical examples (homomorphic encryption, secure multiparty computation, private set intersection, federated learning, trusted execution environments, zero-knowledge proofs, differential privacy, synthetic data), plus a reference table.
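Differential privacy, one of the PETs listed, can be illustrated with the Laplace mechanism for a simple count. A minimal sketch, assuming a counting query (sensitivity 1) and Python’s standard `random` module; the function name is hypothetical, and production systems normally rely on vetted libraries that also handle floating-point attacks and privacy-budget accounting.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def dp_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Noisy count of records matching `predicate`, using the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one person changes a count by at most 1 (sensitivity 1),
    # so Laplace noise with scale 1/epsilon gives epsilon-differential privacy.
    scale = 1.0 / epsilon
    # The difference of two independent exponentials with rate 1/scale is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise
```

A smaller `epsilon` adds more noise and gives stronger protection; any single released value may be off by a few units, but the answers stay useful on average.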

The Dutch Ministry of Justice published a review of the implementation of the GDPR at the national level. The GDPR is based on open standards, such as necessity and proportionality. The experts recommend making those standards more concrete and specifying their interpretation through sector-specific legislation, codes of conduct and guidelines for the practice of data protection law. After studying several cases, the researchers could not clearly establish how the country’s data protection regulator AP determines the size of fines; a more transparent method of setting and imposing penalties could therefore lead to greater understanding and acceptance among the organisations under supervision. The review also raises concerns about the obligation to report data breaches and the AP’s lack of enforcement capacity in cases of unreported breaches.

A Polish law blog looks at the secondary use of electronic health data in the EU. The draft Regulation on the European Health Data Space allows certain reuse of both personal and non-personal health data collected in the context of primary use. Apart from public interest, statistical and scientific purposes, the permitted purposes also include the training, testing and evaluation of algorithms, including in medical devices, AI systems and digital health applications. Some categories of data are described in general terms, which would allow new types of data to be included in them; in the future these categories may include:

- “electronic data related to insurance status, professional status, education, lifestyle, wellness and behavioural data relevant to health”, or

- “data impacting on health, including social, environmental or behavioural determinants of health.”

Additionally, national health data access bodies will be able to grant access to further categories of electronic health data entrusted to them under national law or through voluntary cooperation with data holders (the “data altruism” principle under the EU’s Data Governance Act). At the same time, the processing of such electronic data must avoid risks that could harm natural persons (eg, insurance exclusion, targeted advertising, access to data by third parties, unauthorised medical products or services).

The Swedish privacy agency IMY is starting a pilot project to create in-depth legal guidance on decentralised AI. IMY’s pilot project is being carried out with Sahlgrenska University Hospital and Region Halland. The project is part of a larger strategic initiative led by AI Sweden on information-driven care, where AI helps tailor decisions at the individual and system level and develop more advanced and accurate diagnoses and treatments. Decentralised AI avoids collecting large amounts of data centrally to train algorithms; instead, models are trained locally where the data is held. The locally trained models are then sent to a central point, where their insights are aggregated.
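The train-locally, aggregate-centrally pattern described above is commonly implemented as federated averaging. A minimal sketch, assuming a toy one-parameter model (y ≈ w·x) and hypothetical function names; the source does not describe the models used in IMY’s pilot.

```python
from typing import List, Sequence, Tuple

Dataset = Sequence[Tuple[float, float]]  # (x, y) pairs held by one site


def local_train(w: float, data: Dataset, lr: float = 0.01, epochs: int = 50) -> float:
    """Gradient descent on mean squared error for y ≈ w * x, using only local data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Weight each site's model by its number of training examples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def federated_round(global_w: float, sites: List[Dataset]) -> float:
    # Each site starts from the current global model and trains locally;
    # only the resulting weight and a sample count leave the site, never the raw data.
    updates = [(local_train(global_w, data), len(data)) for data in sites]
    return federated_average(updates)
```

Repeating `federated_round` lets the shared model improve without any site sending its records to the central point, although model updates can still leak information and may need further protection.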

Is the boss watching you? The Norwegian data protection authority Datatilsynet has issued an in-depth report on the monitoring and control of employees’ digital activities. Among its findings:

- More than half of employees do not have a sufficient overview of what information their employer collects (digital work tools record so much information that it can be hard for employees to keep track).

- The employer can collect large amounts of information about employees’ digital activities (eg, tools from Google, Microsoft and Zoom have built-in functions that allow the employer to monitor employees’ activities).

- Software designed to monitor employees can be very intrusive.

- Several employees see signs that their employer monitors website visits, email access, their PC screen (screen recording), activity logs, audio, or GPS location.

- Monitoring tools aimed at employees who work from home are spreading (Portugal has already prohibited the remote monitoring of home workers).

For help with complying with privacy rules when monitoring workers, including which legal basis or software to choose, and for notable infringement cases, see the original guidance (in Norwegian).

The French supervisory authority CNIL has published a guide on individual access tokens. Frequently integrated into authentication procedures, tokens allow a secure connection to a personal space, an account or office documents. They are also often used in two-factor authentication to reduce the risk of account takeover. However, an access token in the form of a link can amount to continuous access to personal data from the Internet. This “gateway” is a vulnerability that malicious actors can exploit. Certain principles can reduce the likelihood of this happening:

- Log the creation and use of tokens and define a purpose-based validity period.

- Generate an authentication link that contains no personal data and no variables whose content is easy to understand and reuse (such as hashed content).

- Require re-authentication if the token gives access to personal data or if its lifespan is not sufficiently limited.

- Limit the number of uses (eg, single or temporary use) according to the intended purpose.

- When an access token is used to connect two services for a data transfer, its validity must also be limited in time.

- Restrict the token to specific services or resources so that it cannot be reused elsewhere.

- Automatically block access to the requested resource, temporarily or permanently, in case of suspicious bursts of requests.

- Users should be able to choose how their remote access token is sent to them (email, SMS, post, phone call).
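Several of these principles can be combined in a small token store. A minimal sketch, assuming an in-memory store and hypothetical class and method names: it issues opaque random tokens, keeps only their hashes server-side (our own assumption, not a CNIL requirement stated in the guide summary), enforces a purpose-based expiry, binds each token to one scope, limits the number of uses and logs creation and use. Re-authentication and blocking of suspicious request bursts are not shown.

```python
import hashlib
import logging
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional

log = logging.getLogger("access_tokens")


@dataclass
class TokenRecord:
    user_id: str
    scope: str         # the one service/resource this token may be used for
    expires_at: float  # end of the purpose-based validity period (epoch seconds)
    uses_left: int     # 1 means single use


class TokenStore:
    """Hypothetical in-memory token store illustrating several CNIL principles."""

    def __init__(self, clock: Callable[[], float] = time.time):
        self._records: Dict[str, TokenRecord] = {}
        self._clock = clock

    @staticmethod
    def _key(token: str) -> str:
        # Assumption: only a hash of each token is kept server-side.
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, user_id: str, scope: str, ttl_seconds: int, max_uses: int = 1) -> str:
        # An opaque random value: the link carries no personal data or guessable variables.
        token = secrets.token_urlsafe(32)
        self._records[self._key(token)] = TokenRecord(
            user_id, scope, self._clock() + ttl_seconds, max_uses
        )
        log.info("token issued scope=%s ttl=%ds", scope, ttl_seconds)
        return token

    def redeem(self, token: str, scope: str) -> Optional[str]:
        """Return the user id if the token is valid for this scope, else None."""
        key = self._key(token)
        record = self._records.get(key)
        if record is not None and self._clock() >= record.expires_at:
            del self._records[key]  # purge expired tokens
            record = None
        if record is None or record.scope != scope:
            log.warning("token rejected scope=%s", scope)
            return None
        record.uses_left -= 1
        if record.uses_left <= 0:
            del self._records[key]  # limited number of uses
        log.info("token redeemed scope=%s", scope)
        return record.user_id
```

The logs record the scope and outcome but not the user identifier, so the audit trail does not itself become another store of personal data.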

The Latvian data protection authority DVI published a simple yet essential reminder of what a privacy notice must contain. The first step in controlling personal data is awareness of the organisation’s planned activities. Before processing begins, the organisation must provide information, and the person whose data it intends to process has the right to know:

- the identity of the organisation and its contact details;

- the data protection officer and their contact details;

- the purposes of and legal basis for the processing;

- if the processing is based on legitimate interests, a description of these interests;

- the recipients or categories of recipients of the personal data, if any;

- how personal data will be protected in case of transfer to a third country or an international organisation;

- the period for which the information will be stored or, if this is not possible, how this period will be determined;

- how to exercise the other rights of the data subject: access to personal data, its rectification or erasure, restriction of processing, the right to object and the right to data portability;

- if the processing is based on consent, the right to withdraw it at any time, without affecting the lawfulness of processing carried out before withdrawal;

- the right to lodge a complaint with the supervisory authority;

- whether the provision of personal data is required by law or a contract;

- whether it is a prerequisite for concluding a contract;

- whether the person is obliged to provide personal data and the possible consequences of not providing it;

- whether there is automated decision-making, including profiling, and if so, meaningful information about the logic involved and the consequences of such processing for the person.

Investigations and enforcement actions: Instagram fine, Sephora settlement, research data processing, worker video and audio surveillance, costs of data protection

Ireland’s Data Protection Commission will fine Instagram 405 million euros for breaching the GDPR in its handling of children’s data on the platform. Instagram’s parent company, Meta, has already said it will appeal the decision. Although sizable, it is not the largest fine a company has been ordered to pay under the GDPR. The inquiry, which began in 2020, focused on users aged 13 to 17 who were able to operate business accounts, which could result in their phone numbers and/or email addresses being made public. Instagram updated its settings over a year ago and has since unveiled additional measures to keep teenagers safe and secure.

In the first publicly announced enforcement settlement under the California Consumer Privacy Act (CCPA), French cosmetics company Sephora will pay 1.2 million dollars and comply with several requirements. According to the attorney general, Sephora violated the law by failing to tell customers that it was selling their personal information, failing to honour user requests to opt out submitted via user-enabled global privacy controls, and failing to cure these violations within the 30-day period allowed. “Sale” in this case means that Sephora disclosed or made available consumers’ data to third parties (ad networks and analytics companies) through online tracking technologies such as pixels, web beacons, software development kits, third-party libraries and cookies, in exchange for monetary or other valuable consideration. The case also signals a significant increase in risk for businesses operating in California ahead of the California Privacy Rights Act taking effect in January 2023.
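Global Privacy Control reaches the server as the `Sec-GPC: 1` HTTP request header sent by supporting browsers and extensions. A minimal server-side sketch, assuming request headers arrive as a plain mapping and using hypothetical function names, of treating that signal as an opt-out of sale or sharing.

```python
from typing import Mapping


def gpc_opt_out(headers: Mapping[str, str]) -> bool:
    """True if the request carries a Global Privacy Control signal (Sec-GPC: 1)."""
    # HTTP header names are case-insensitive, so normalise before looking up.
    normalised = {k.lower(): v.strip() for k, v in headers.items()}
    return normalised.get("sec-gpc") == "1"


def may_share_with_ad_partners(headers: Mapping[str, str], user_opted_out: bool) -> bool:
    # A GPC signal is treated like an opt-out submitted through the website itself.
    return not (user_opted_out or gpc_opt_out(headers))
```

In practice the result would gate whether third-party pixels, SDKs and similar trackers are loaded at all; checking the header alone does not stop scripts that are already embedded in the page.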

The Danish data protection authority has completed an inspection of the Region of Southern Denmark, focusing on the processing of personal data in health research. It selected three research projects and examined their legal basis for processing and the allocation of responsibilities and roles. The regulator requested copies of the data processing agreements, documentation of any supervision of the data processors, and the guideline “Conclusion of data processing agreements and supervision of data processors”, which appeared on the region’s list of policies. Following the inspection, the regulator stated that a data controller cannot meet these requirements simply by entering into a data processing agreement with a data processor. The controller must also supervise the processor, to a greater or lesser extent, to ensure that the agreement is complied with, including checking that the processor has implemented the agreed technical and organisational security measures. For instance, in two cases the region had entered into data processing agreements with three different data processors in 2018 and 2020, and the agreements had not been updated since.

Following a complaint from an employee, the Spanish data protection regulator AEPD fined Muxers Concept 20,000 euros. An audio recording device was found hidden behind ceiling tiles in the company’s locker room, and what appeared to be a video surveillance camera and sound recorder were found in the employee restrooms. The AEPD considered even the recording of employees’ interactions with clients disproportionate as a means of ensuring compliance with labor law. All surveillance and control measures must be proportionate to the purpose pursued, namely providing security and ensuring compliance with labor law. The AEPD therefore found that Muxers had violated Art. 6 of the GDPR by processing personal data without a legal basis.

Meanwhile, the EDPB has published an overview of the financial and human resources that EU member states have made available to data protection supervisory authorities (SAs) in recent years. It shows that the SAs need more staff to contribute more effectively to the GDPR cooperation and consistency procedures, to raise awareness, to act more proactively, and to conduct more investigations, including on-site investigations, especially in connection with complaints and security breaches. In many cases, only basic processing of the growing number of complaints and breach notifications is currently possible. The SAs also need more resources to develop information systems, increase their national and European communication, and deal with new tasks arising from developments in EU regulation. In some cases, staff salaries were reported to be too low compared with private-sector salaries in the same field.

Data security: data medium destruction, internet-connected appliances, credential theft

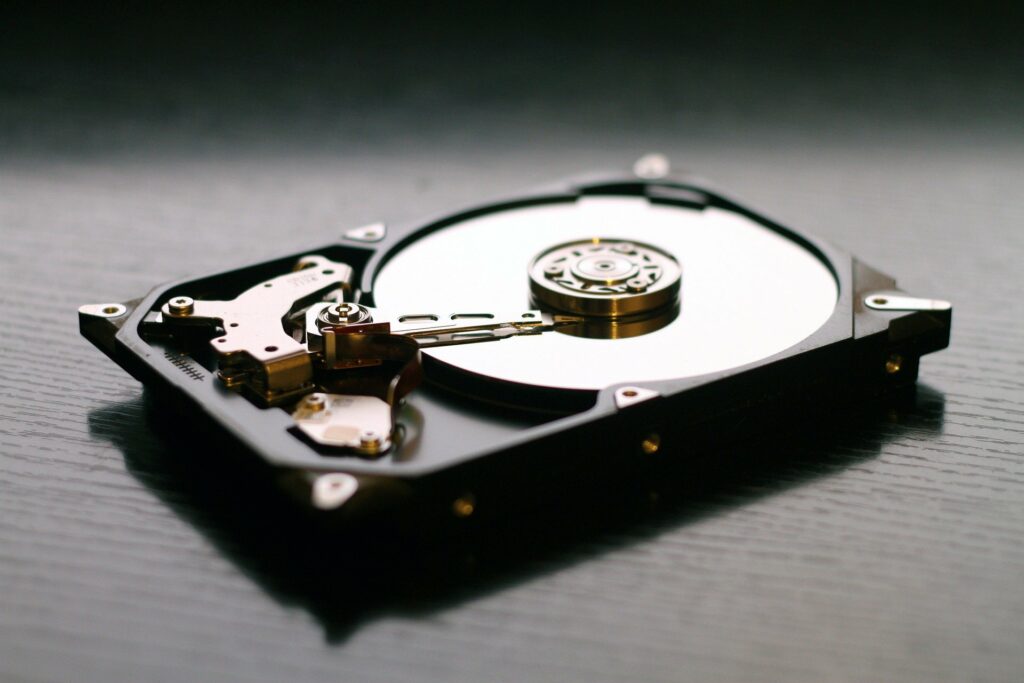

Germany’s Federal Commissioner for Data Protection, the BfDI, published a guide on destroying data carriers (in German). Destroying data carriers is a technical and organisational measure to ensure data security, in particular to prevent unauthorised third parties from gaining knowledge of personal data. Following international standards, the responsible body must first classify the personal data processed, or the data carriers storing it, according to their protection requirements and define appropriate protection classes (from normal to very high). The higher the security level, the greater the effort an attacker needs to reconstruct the destroyed data carriers and read the personal data stored on them. In addition, different specifications apply to different materials (such as paper, microfilm, magnetic hard drives, optical data carriers and semiconductor memories) and must be observed when destroying a data carrier.

According to a European Commission document seen by Reuters, internet-connected smart appliances such as refrigerators and TVs will have to meet stringent cybersecurity requirements or face fines or a ban from the EU market. Concerns about cybersecurity threats have grown following high-profile incidents in which hackers disrupted businesses and demanded huge ransoms. The EU executive is expected to present its Cyber Resilience Act proposal in September. Manufacturers will have to assess the cybersecurity risks of their products and implement the necessary measures. Once they become aware of an incident, they must report it to ENISA, the EU’s cybersecurity agency, within 24 hours and take action to fix the flaws. Distributors and importers will have to verify that products comply with EU rules. National market surveillance authorities will have the power to “prohibit or restrict that product from being made available on its national market” if businesses fail to comply.

US cybersecurity expert Brian Krebs looks into why phishers are so successful at stealing one-time passcodes and remote access credentials from employees via text messages. In one example, starting in mid-June 2022, a deluge of SMS phishing messages targeted workers at commercial staffing agencies that provide outsourcing and customer support to hundreds of businesses. The messages urged employees to click a link to learn about an impending change to their work schedule; the links led to freshly registered domains that often incorporated the target organisation’s name and hosted phishing pages mimicking the authentication page of the employee’s workplace. Those who submitted their credentials were then asked for their one-time multi-factor authentication code. The phishing websites used a Telegram instant messaging bot to relay any submitted credentials in real time, enabling the attackers to log in as that employee at the employer’s legitimate website.
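The campaign relied on domains that contained the target organisation’s name without belonging to it. A minimal heuristic sketch, assuming a hypothetical brand keyword and legitimate domain: it flags links whose host mentions the brand but is neither the legitimate domain nor one of its subdomains. It cannot check how recently a domain was registered and will miss lookalikes that avoid the brand name.

```python
from urllib.parse import urlsplit


def is_lookalike(url: str, brand: str, legit_domain: str) -> bool:
    """Flag URLs whose host mentions the brand but is not the legitimate domain or a subdomain of it."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    legit = legit_domain.lower()
    if host == legit or host.endswith("." + legit):
        return False
    return brand.lower() in host
```

For example, with the hypothetical brand `acme` and domain `acme.com`, a link to `acme-schedule.com` is flagged while `sso.acme.com` is not.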

Big Tech: UK Children’s code use cases, SpongeBob app vs COPPA, fingerprints in a school WC

The ICO’s groundbreaking Children’s code was fully rolled out in the UK in September 2021, requiring online services including websites, apps, and games to provide better privacy protections for children. Some changes over the past year included:

- Facebook and Instagram limited targeting by age, gender, and location for those under 18.

- Facebook and Instagram began asking for people’s date of birth at sign-up, preventing them from signing up if they repeatedly entered different dates, and disabling accounts where people can’t prove they’re over 13.

- Instagram launched parental supervision tools, along with features like Take A Break to help teens manage their time on the app.

- YouTube has turned off autoplay by default and turned on take-a-break and bedtime reminders by default for under-18s.

- Google has enabled anyone under 18 (or their parent/guardian) to request the removal of their images from Google image search results; under-18s cannot turn on location history on their Google accounts; and Google has expanded safeguards to prevent age-sensitive ad categories from being shown to these users.

- Nintendo only allows users above 16 years of age to create their own accounts and set their own preferences.

In the US, the Children’s Advertising Review Unit (CARU) has found Tilting Point Media, owner and operator of the SpongeBob: Krusty Cook-Off app, in violation of COPPA and CARU’s Self-Regulatory Guidelines for Advertising and Children’s Online Privacy Protection. As the operator of a mixed-audience, child-directed app, Tilting Point must ensure either that no personal information is collected, used or disclosed from users under 13, or that notice is provided and verifiable parental consent is obtained before any such collection, use or disclosure. Although the app has an age screen, it did not prevent CARU from using the app as a 10-year-old child, agreeing to Tilting Point’s terms of service and privacy policy, and consenting to the processing of data to receive “personalized” advertising. The app’s non-declinable privacy policy and terms of service state that the user must be at least 13 years old to use the company’s product, but the age gate does not prevent a child from ticking those boxes and playing the game.
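By contrast with a checkbox, a neutral age screen asks for a birth date without revealing the threshold and routes younger users away from personal-data collection. A minimal sketch, assuming a hypothetical `device_id` (for example a first-party cookie used only for this purpose) so that the first answer sticks and a child cannot simply retry with a different date; the class and mode names are hypothetical.

```python
from datetime import date
from typing import Dict

COPPA_AGE = 13


def age_on(birth: date, today: date) -> int:
    """Whole years between birth and today."""
    had_birthday = (today.month, today.day) >= (birth.month, birth.day)
    return today.year - birth.year - (0 if had_birthday else 1)


class NeutralAgeGate:
    """Hypothetical age screen that keeps the first birth date entered on a device."""

    def __init__(self) -> None:
        self._first_answer: Dict[str, date] = {}

    def screen(self, device_id: str, birth: date, today: date) -> str:
        # Later answers from the same device are ignored, so re-entering an older date fails.
        birth = self._first_answer.setdefault(device_id, birth)
        if age_on(birth, today) < COPPA_AGE:
            # No personal data for personalised ads until verifiable parental consent.
            return "child_mode"
        return "standard"
```

The screen only works if nothing else in the flow, such as a terms-of-service checkbox, can override the "child_mode" outcome.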

In Australia, a Sydney high school is requiring students to scan their fingerprints if they wish to use the WC. Some parents say they were not asked for consent to take their children’s fingerprints, and one mother has requested that her daughter’s fingerprints be deleted from the system. According to the education department, the biometric technology is meant to stop vandalism and anti-social behaviour in the toilets. It also stated: “The use of this system is not compulsory. If students or parents prefer, students can also access the toilets during those times by obtaining an access card from the office.” Issues surrounding biometric data and consent have not been extensively tested in the Australian courts. Other schools across New South Wales have used the technology for several years to let students mark their attendance. Yet New South Wales police cannot conduct forensic procedures such as taking fingerprints without a person’s informed consent or a court order.