It is undeniable that children (individuals under 18) make up a large portion of the online population. With more and more content created specifically for children, a UK study by Ofcom has shown that many start consuming content on video-sharing platforms such as YouTube as young as 3 to 4 years old, and that the majority of 8- to 11-year-olds have a social media account. As a result, these platforms and services process vast amounts of children’s data, whether they intend to or not.

Due to their age and general level of maturity and education, children are considered vulnerable and are granted special rights in the majority of jurisdictions. This is recognised internationally through, for example, the United Nations Convention on the Rights of the Child. This vulnerability is taken into account across different areas of legislation, including data protection, which has led to specific provisions in the GDPR such as Art. 8, which lays down the conditions under which information society services may process children’s data.

Art. 8 GDPR’s requirements and the age of digital consent

Art. 8 of the GDPR is the only article dedicated specifically to the processing of children’s personal data. It provides that, where an information society service is offered directly to a child on the basis of consent, the processing of the child’s personal data is lawful when the child is at least 16 years old (the age of digital consent) or, if the child is below that age, only where consent has been given or authorised by the holder of parental responsibility for that child. The GDPR also allows individual member states to set a lower age limit by law, so long as it is no lower than 13. Countries such as Germany and the Netherlands have opted to stick to the standard already established by the GDPR, while others, including Belgium and the UK prior to its departure from the EU, have lowered the threshold to the lowest possible age of 13. Notably, the UK’s current data protection law still sets the age of digital consent at 13.
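
The rule above can be expressed as a simple check. The following is a minimal sketch rather than a compliance tool: the function names and the country table are hypothetical, the table only covers the four jurisdictions named in this article, and it assumes that consent is the legal basis for the processing. Unknown countries fall back to the GDPR default of 16, which is a conservative assumption and not a statement of national law.

```python
# Ages of digital consent for the jurisdictions named in this article.
# Illustrative only: check current national law before relying on these values.
DIGITAL_CONSENT_AGE = {
    "DE": 16,  # Germany
    "NL": 16,  # Netherlands
    "BE": 13,  # Belgium
    "GB": 13,  # United Kingdom
}

GDPR_DEFAULT_AGE = 16  # default age of digital consent under Art. 8
GDPR_MINIMUM_AGE = 13  # lowest threshold a member state may choose


def digital_consent_age(country_code: str) -> int:
    """Return the age of digital consent, falling back to the GDPR default."""
    age = DIGITAL_CONSENT_AGE.get(country_code.upper(), GDPR_DEFAULT_AGE)
    # Guard against a misconfigured table: the threshold must be 13 to 16.
    if not GDPR_MINIMUM_AGE <= age <= GDPR_DEFAULT_AGE:
        raise ValueError(f"invalid age of digital consent for {country_code}: {age}")
    return age


def needs_parental_consent(user_age: int, country_code: str) -> bool:
    """True if consent must come from the holder of parental responsibility."""
    if user_age < 0:
        raise ValueError("user_age must not be negative")
    return user_age < digital_consent_age(country_code)
```

Under these assumptions, a 14-year-old would need parental consent in Germany but not in Belgium. The check is only as reliable as the `user_age` passed in, which is exactly the age assurance problem discussed in the rest of this article.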

The first and inevitable consequence of this provision is that the age of a data subject must be appropriately verified, in order to assess whether these rules apply and to take the appropriate steps. However, recent cases and studies have shown that it is inherently difficult to obtain the consent of a parent or guardian, as there are no appropriate mechanisms in place to ensure that children are being truthful about their age.

Growing concerns about the processing of children’s data

One of the main issues that information society services face with regard to the processing of children’s data is that they are not aware that many of their users are actually under the age of digital consent. So far, the majority of these platforms have relied on relatively lax forms of self-declaration: they offer their services on the assumption that users are responsible for declaring their age truthfully. Where no extra assurance is required, users can easily lie about their age to gain access.

Ofcom’s research in the UK has shown that on platforms such as TikTok and Facebook, which only required users to enter their date of birth, the vast majority simply entered a date of birth that made them appear older than they actually are. The main issue is that this may expose young users to content that is not safe for their age, and to unlawful collection of their personal data by these platforms.

It is therefore unsurprising that Meta and TikTok are the two largest companies to have been fined for misuse of children’s data, by the Irish and UK data protection authorities respectively. In fact, the UK’s ICO noted that TikTok had been aware of the presence of under-13s on the platform but had not taken the right steps to remove them.

It is clear that more stringent age assurance techniques need to be developed and implemented to ensure that children’s personal data is only processed in accordance with GDPR standards. Whilst the EU has yet to issue specific guidelines on this matter, the UK has published the Children’s Code, a code of practice that applies to online services likely to be accessed by children.

Age assurance mechanisms

Among the 15 standards that the Code sets out is the need to ensure that the product and its features are age-appropriate for the individual users. To do so, the Code requires that the age of users is established with an appropriate level of certainty, based on the risk level of the processing and taking into account the best interests of the child. It is therefore also crucial under the Code to carry out a Data Protection Impact Assessment (DPIA) prior to processing children’s data, in order to evaluate that risk level.

The Code suggests some age assurance mechanisms that information society services may put in place, and the UK children’s rights foundation 5Rights has identified additional ones, along with their possible use cases, advantages and risks. Some of these include:

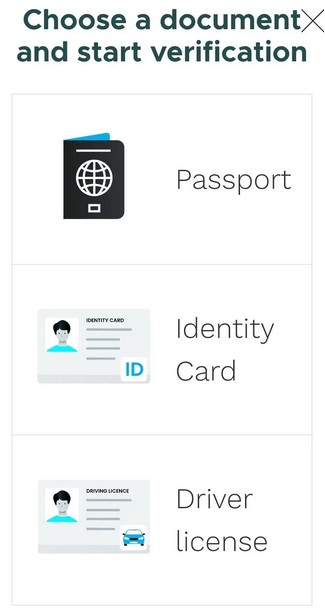

- Hard identifiers, such as sharing one’s ID, passport or other identifying information. These are considered to provide a high level of assurance, but raise concerns with regard to data minimisation and might lead to a disproportionate loss of privacy. Organizations are generally advised to apply appropriate storage limitation periods to this data, limited to what is needed to verify an individual’s age once, which makes it tricky to demonstrate, for compliance purposes, that the information was checked. YouTube and OnlyFans are examples of ISS that make use of this mechanism to give access to age-restricted content.

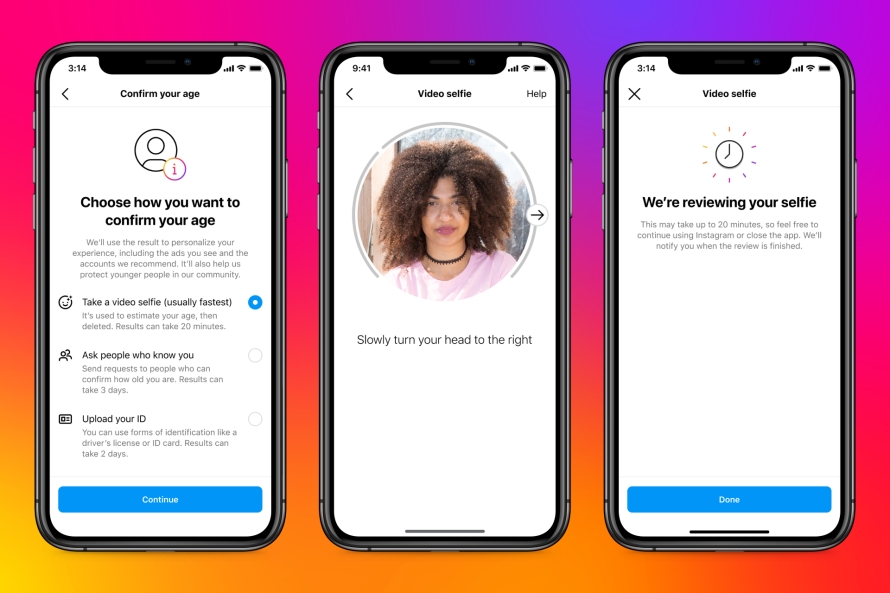

- Biometric data relies on artificial intelligence to scan for age identifiers in a person’s face, in their use of language (through natural language processing) or in their behavioral patterns. It is most commonly used through facial recognition. However, it presents a high degree of risk due to the use of special categories of data, the risk of discrimination by biased artificial intelligence and the effective profiling that takes place. Whilst it does provide a high level of assurance, it also requires very stringent safeguards to ensure data is processed safely. GoBubble, a social network made for children in schools, has been using this kind of age assurance technology by asking users to send a selfie upon sign-up. Meta is also currently testing this method of age assurance, working with Yoti, one of the leading age assurance technology developers.

- Capacity testing allows services to estimate a user’s age through an assessment of their capacity, for example through a puzzle, language test or task that might give an indication of their age or age range. Whilst this is a safe and engaging option for children, and does not require the collection of personal data, it might not be as effective at determining the specific age of a user. The Chinese app developer BabyBus uses this methodology in its app, by providing a test where users are asked to recognise traditional Chinese characters for numbers.
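
For the hard-identifier approach in particular, one way to reconcile storage limitation with the need to evidence the check is to keep only the outcome of the verification and not the document or the date of birth. The sketch below illustrates that idea under stated assumptions: `AgeCheckRecord`, `age_on` and `record_id_check` are hypothetical names, and which fields are proportionate to keep is a judgment for the organisation's own DPIA.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AgeCheckRecord:
    """What is kept after the check: no document image, no date of birth."""
    user_id: str
    method: str
    meets_threshold: bool
    threshold_age: int
    checked_at: datetime


def age_on(date_of_birth: date, today: date) -> int:
    """Age in whole years on the given day."""
    years = today.year - date_of_birth.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (date_of_birth.month, date_of_birth.day):
        years -= 1
    return years


def record_id_check(user_id: str, date_of_birth: date, threshold_age: int,
                    today: Optional[date] = None) -> AgeCheckRecord:
    """Use the date of birth once, then keep only the yes/no result."""
    now = datetime.now(timezone.utc)
    reference_day = today if today is not None else now.date()
    return AgeCheckRecord(
        user_id=user_id,
        method="hard_identifier",
        meets_threshold=age_on(date_of_birth, reference_day) >= threshold_age,
        threshold_age=threshold_age,
        checked_at=now,
    )
```

The date of birth is only a function argument here and is never written to the record, so the stored evidence shows that a check took place, when, and with what result, without retaining the identifier itself.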

More examples and use cases of age assurance mechanisms are provided in the 5Rights report.
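
The risk-based approach of the Code, where the certainty of the age check should match the risk level of the processing, can be sketched as a simple lookup. This is an illustration only: the three-step scale, the mechanism names and the ranking of each mechanism are assumptions loosely based on the descriptions above (capacity testing placed in the middle is a guess), not values taken from the Code or the 5Rights report.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed assurance level per mechanism, for illustration only.
MECHANISM_ASSURANCE = {
    "self_declaration": Level.LOW,
    "capacity_testing": Level.MEDIUM,
    "biometric_estimation": Level.HIGH,
    "hard_identifier": Level.HIGH,
}


def acceptable_mechanisms(processing_risk: Level) -> list[str]:
    """Mechanisms whose assurance level is at least the processing risk."""
    return sorted(
        name
        for name, assurance in MECHANISM_ASSURANCE.items()
        if assurance >= processing_risk
    )
```

Here `processing_risk` stands for the outcome of the DPIA. With this table, high-risk processing leaves only biometric estimation and hard identifiers, and the choice between them would still depend on the privacy trade-offs described for each mechanism.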

Although it may be difficult to strike a balance between appropriately verifying users’ age prior to sign-up and avoiding over-intrusive measures to do so, it is apparent that relying solely on users being truthful about their age is no longer sufficient for the majority of platforms, especially those that process vast amounts of personal data or sensitive data, or that use personal data for targeted advertising. With the growing number of very young children accessing the internet, it is important to ensure that they are protected, that their fundamental rights are respected, and that the relevant data protection provisions are fulfilled. In recent years, large steps have been made in the development of alternative secure identity and age verification technologies. The tools are therefore available for organizations to ensure that their GDPR requirements are also met in this respect.
