Self-hosting AI models is the future of privacy and compliance. Most people use hosted artificial intelligence (AI) services such as ChatGPT by OpenAI or Gemini by Google. These are known as cloud-based AI models: the computation is done on servers operated by the AI providers. Self-hosting instead means running the model on hardware you own, so you remain the controller of all of the data. This significantly reduces the risks of unauthorized access, data breaches, and non-compliance with regulatory frameworks, and it helps individuals and businesses meet strict regulations like the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA).

What does self-hosting an AI model mean?

To be explicit: self-hosting means the model runs directly on hardware you own (e.g., you can run Ollama on your laptop). This control allows for enhanced privacy and security. If you host an AI model on your own device, the data never needs to leave that device, so the risk of data breaches or unauthorized access decreases drastically. The data also does not need to travel over a network to a remote server, which removes network latency and can give a faster response, although overall speed depends on your hardware. Latency is the time that passes between asking an AI model a question and receiving its response.
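As a rough illustration of latency, the sketch below times a single question sent to a local model. It assumes an Ollama server is running on its default local address and that a model has already been pulled; the model name `llama3.2` and the helper names `ask_local_model` and `measure_latency` are placeholders chosen for this example, not part of the original setup.

```python
import json
import time
import urllib.request
from typing import Callable, Tuple

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def ask_local_model(prompt: str, model: str = "llama3.2") -> str:
    """Send a prompt to a locally running Ollama server and return its reply.

    The model name is a placeholder; use whichever model you have pulled.
    """
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]


def measure_latency(ask: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Return the answer and the seconds elapsed between asking and receiving it."""
    start = time.perf_counter()
    answer = ask(prompt)
    elapsed = time.perf_counter() - start
    return answer, elapsed
```

Calling `measure_latency(ask_local_model, "Why is the sky blue?")` returns the reply together with the elapsed seconds. Because `measure_latency` accepts any callable, the same function could time a cloud-hosted model for comparison.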

Most modern computers can run smaller AI models with no issue, but larger models tend to be more resource intensive. Many resources are available for comparing free, open-source models and checking their hardware compatibility. Using an open-source model can bring greater privacy and transparency. Keeping the processing local also reduces the risk of data breaches and supports compliance when sensitive data is processed with AI models.
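A quick way to judge whether a model is likely to fit on a given machine is to estimate the memory its weights need: the number of parameters multiplied by the bytes used per parameter. The sketch below applies that rule of thumb. The 20% overhead factor for runtime buffers is an assumption made for this illustration, and real requirements vary by runtime and context length.

```python
def estimate_weight_memory_gb(parameters_billions: float, bits_per_weight: int = 16) -> float:
    """Approximate memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = parameters_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(
    parameters_billions: float,
    available_gb: float,
    bits_per_weight: int = 16,
    overhead: float = 1.2,  # assumed headroom for runtime buffers, not a measured value
) -> bool:
    """Rough check of whether a model is likely to fit in the available memory."""
    needed_gb = estimate_weight_memory_gb(parameters_billions, bits_per_weight) * overhead
    return needed_gb <= available_gb


# A 7-billion-parameter model needs about 14 GB at 16 bits per weight,
# and about 3.5 GB when quantized to 4 bits per weight.
print(estimate_weight_memory_gb(7, 16))
print(estimate_weight_memory_gb(7, 4))
```

This is why smaller or quantized models run comfortably on ordinary laptops while larger ones call for dedicated hardware.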

Why and how to invest in self-hosting AI models?

Hardware plays a crucial role in running usable AI models. Self-hosting works best with a graphics processing unit (GPU): running AI solely on a central processing unit (CPU) leads to slower computation and, as a result, higher latency.

What are the key benefits of self-hosting AI models?

- Improved Performance: GPUs significantly enhance processing speed, allowing AI models to generate responses faster.

- Cost Savings Over Time: While the initial investment in hardware may be high, self-hosting eliminates recurring cloud subscription fees, leading to long-term financial benefits.

- Data Control & Privacy: Self-hosting removes dependence on third-party cloud providers, ensuring full control over sensitive data.

- Regulatory Compliance: Self-hosting reduces the risk of breaches and helps meet strict regulations like the GDPR and HIPAA.

- Avoids External Policy Changes: Cloud-based AI providers frequently update pricing models, governance rules, and data policies. Self-hosting AI models provides stability and predictability in data management.

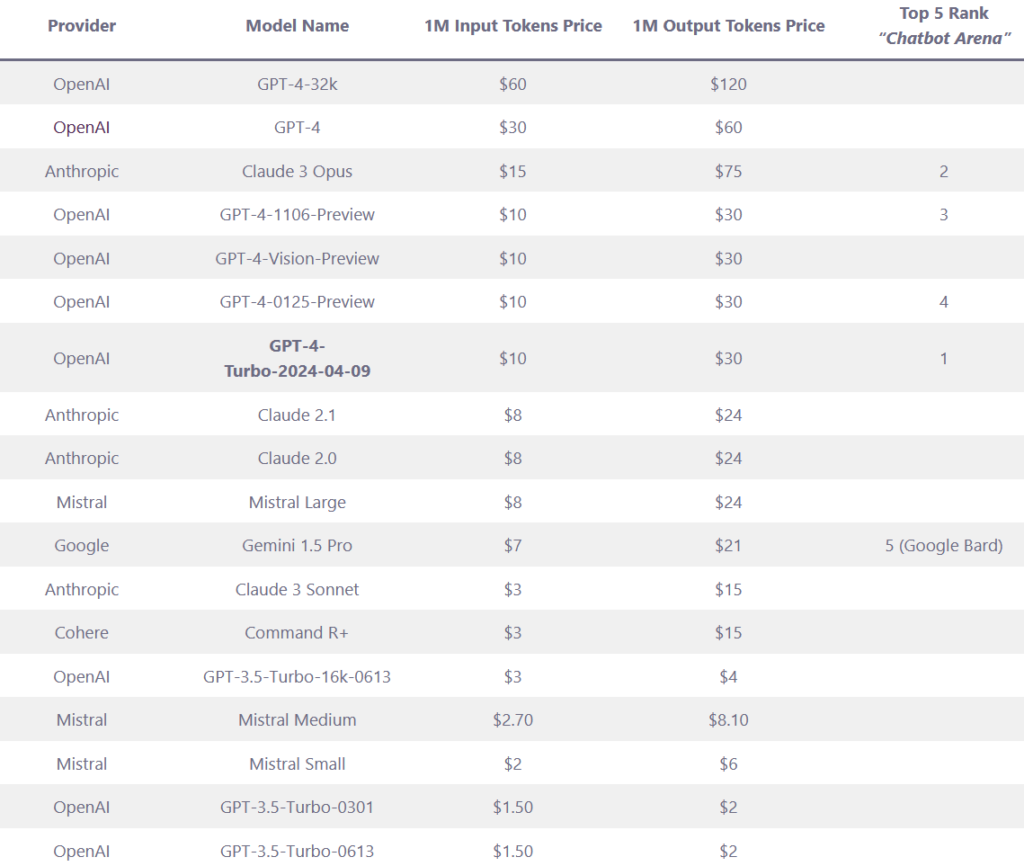

- Eliminates Token Costs: AI services from major providers (e.g., OpenAI, Google) typically bill per token, making usage costs unpredictable. Self-hosting avoids reliance on fluctuating pricing. As the included chart demonstrates, these prices keep changing, and anyone using AI that is not self-hosted is at the whim of the prices set by the service provider.

By investing in local AI infrastructure, businesses and individuals regain autonomy over AI processing, ensuring cost efficiency, data privacy, and long-term stability. Owning the hardware means you are not at the whim of a service provider for a virtual cloud instance. It gives you complete control over the data, and the cost of running AI falls over time once the hardware is paid for.
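The trade-off between an upfront hardware purchase and recurring cloud fees can be sketched as a simple break-even calculation. All figures below are hypothetical placeholders rather than real prices, and `break_even_months` is a name introduced only for this illustration.

```python
import math


def break_even_months(
    hardware_cost: float,
    monthly_cloud_cost: float,
    monthly_local_cost: float = 0.0,
) -> float:
    """Months until the hardware purchase is repaid by avoided cloud fees.

    monthly_local_cost covers running costs such as electricity.
    Returns infinity if self-hosting does not save money each month.
    """
    monthly_saving = monthly_cloud_cost - monthly_local_cost
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost / monthly_saving


# Hypothetical figures: a 2000 hardware purchase, 100 per month in cloud fees,
# and 20 per month in electricity for the local machine.
print(break_even_months(2000, 100, 20))  # 25.0
```

After the break-even point, every further month of use costs only the local running costs.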

How can self-hosting AI help with regulatory compliance?

Self-hosting AI models is a crucial step toward ensuring compliance with data protection regulations such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA), while also reducing reliance on big tech companies. Under Article 9 of the GDPR, special categories of personal data, such as health information, biometric data, and racial or ethnic origin, require strict protection: processing them is prohibited unless an exception such as explicit consent applies. By self-hosting AI models, organizations retain full control over such data, minimizing the risk of unauthorized access and third-party breaches.

Studies have shown that developing AI models within institutional boundaries, particularly in healthcare, enhances privacy and regulatory compliance. It allows for more ethical and secure AI deployment. Furthermore, reliance on centralized AI models controlled by major corporations raises concerns about monopolized access to data, which can lead to biased decision-making and limited transparency. Self-hosting AI fosters greater ethical responsibility, ensuring that data governance aligns with user interests rather than corporate agendas.

Case study: DeepSeek

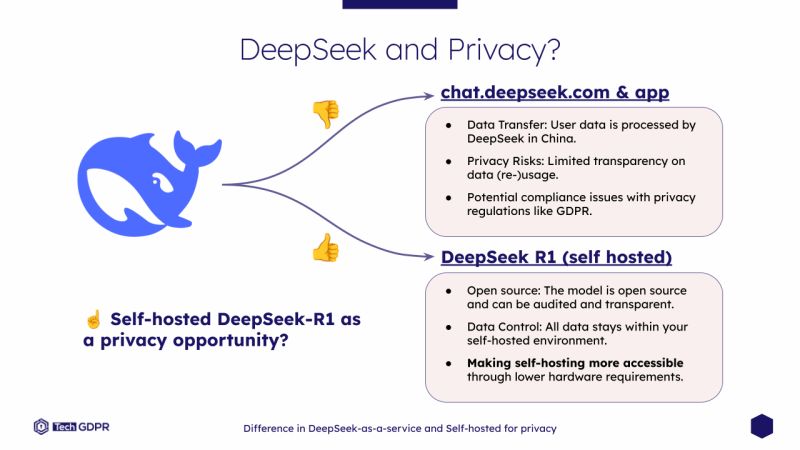

At the beginning of 2025, the introduction of DeepSeek R1 sent a shock through the AI sphere. DeepSeek, a Chinese startup, was able to create and train an open-source AI model for a fraction of the cost of its competitors. It is free to download and use. Because DeepSeek is based in China, concerns grew about using chat.deepseek.com or the application because of where the data is sent. However, if one self-hosts DeepSeek R1, the data is not sent anywhere beyond the controller's own hardware. Running DeepSeek as a self-hosted AI model is a simple and cost-effective way to explore the benefits of self-hosted AI, including privacy, performance, and cost savings.

Why is DeepSeek good for privacy?

But do self-hosted AI models perform worse?

Short answer: No. A Swiss study showed that using a small local deep neural network (DNN) alongside a remote large-scale AI model can cut the prediction cost roughly in half without affecting the system’s accuracy. In 2022, GPT-3 models cost $0.48 per request. In the study, each input was first sent to a locally hosted DNN. If the local response was trustworthy, the input was not forwarded to GPT-3. If it was not trustworthy, GPT-3 computed the response instead. The local DNN generated a correct prediction or response for 48% of the inputs with very little loss of accuracy. Self-hosted AI models can therefore save money by saving tokens and avoiding expensive remote calls, with very little loss in accuracy.
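The local-first routing described above can be sketched as a simple cascade. This is not the study's implementation: the text does not say how trustworthiness was judged, so the sketch assumes the local model reports a confidence score that is compared against a threshold. The function name `cascade`, the threshold of 0.8, and the two model callables are all hypothetical; the default cost per remote call reuses the $0.48 figure quoted above.

```python
from typing import Callable, Iterable, List, Tuple

# A local model returns (answer, confidence between 0 and 1); a remote model returns an answer.
LocalModel = Callable[[str], Tuple[str, float]]
RemoteModel = Callable[[str], str]


def cascade(
    inputs: Iterable[str],
    local_model: LocalModel,
    remote_model: RemoteModel,
    threshold: float = 0.8,           # assumed trust criterion
    cost_per_remote_call: float = 0.48,
) -> Tuple[List[str], int, float]:
    """Answer locally when confident; otherwise fall back to the remote model.

    Returns the answers, the number of remote calls, and the total remote cost.
    """
    answers: List[str] = []
    remote_calls = 0
    for text in inputs:
        answer, confidence = local_model(text)
        if confidence < threshold:
            # The local answer is not trusted, so pay for a remote call.
            answer = remote_model(text)
            remote_calls += 1
        answers.append(answer)
    return answers, remote_calls, remote_calls * cost_per_remote_call
```

Every input the local model handles with confidence is a remote call that is never paid for, which is where the savings come from.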

Why should businesses adopt self-hosting AI?

In a world where AI is increasingly intertwined with daily life, the decision to self-host AI models offers a powerful alternative to cloud-based solutions. By self-hosting AI models on personal hardware, one can improve:

- Data Security: Eliminates external risks by keeping information in-house.

- Regulatory Compliance: Easier to meet industry-specific privacy laws.

- Cost Efficiency: Reduces long-term expenses related to cloud computing and API usage.

- Customization & Flexibility: Empowers users to fine-tune models to their specific needs, ensuring greater transparency and understanding of how AI systems operate.

- Improved Performance: Faster response times and reduced latency lead to better user experiences.

With advancements in open-source models like DeepSeek R1, running self-hosted AI models is more accessible than ever. This allows users to benefit from high-performance models without sacrificing privacy or autonomy. As AI continues to evolve, self-hosting AI models stands as a viable and increasingly necessary choice for those who prioritize control, security, and ethical responsibility in their AI usage.